<English cross post with my DCP blog>

When debating capacity management in the datacenter, the number of watts consumed per rack is always a hot topic.

Years ago we could get away with building datacenters that supported 1 or 2 kW per rack in cooling and energy supply. In the last few years, racks of around 5-7 kW seem to have become the standard. Five years ago I witnessed the introduction of blade servers first hand. This generated much debate in the datacenter industry, with some claiming we would all have 20+ kW racks within 5 years. This never happened… well, at least not on a massive scale…

So what is the trend in energy consumption on a rack basis?

Readers of my Dutch datacenter blog know I have been watching and trending energy development in the server and storage industry for a long time. To update my trend analysis I wanted to start with a consumption trend for the last 10 years. I could use the hardware specs found on the internet for servers, but most published energy consumption values are ‘name-plate ratings’. Green Grid’s whitepaper #23 states correctly:

Regardless of how they are chosen, nameplate values are generally accepted as representing power consumption levels that exceed actual power consumption of equipment under normal usage. Therefore, these over-inflated values do not support realistic power prediction

I have known HP’s ProLiant portfolio for a long time and have successfully used their HP Power Calculator tools (now: HP Power Advisor). They display the nameplate values as well as the power used at different utilization levels, and I know from experience that these values are pretty accurate. So that seems as good a starting point as any…

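I decided to go for 3 form factors: the 1U rackmount server, the blade server and the Density Optimized server. The last one is best described as: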

..minimalist server designs that resemble blades in that they have skinny form factors but they take out all the extra stuff that hyperscale Web companies like Google and Amazon don’t want in their infrastructure machines because they have resiliency and scale built into their software stack and have redundant hardware and data throughout their clusters….These density-optimized machines usually put four server nodes in a 2U rack chassis or sometimes up to a dozen nodes in a 4U chassis and have processors, memory, a few disks, and some network ports and nothing else per node.[They may include low-power microprocessors]

For the 1U server I selected the HP DL360, a well-known mainstream ‘pizzabox’ server. For the blade servers I selected the HP BL20p (p-class) and HP BL460c (c-class). The Density Optimized server could only be the recently introduced (5U) HP Moonshot.

The guidelines for the server configurations:

- Single power supply (no redundancy) and platinum rated when available.

- No additional NICs or other modules.

- Always selecting the power optimized CPU and memory options when available.

- Always selecting the smallest disk. SSD when available.

- Blade server enclosures:

  - Pass-through devices, no active SAN/LAN switches in the enclosures

  - No redundancy and onboard management devices.

  - C7000 for c-class servers

- Converted the blade chassis power consumption, fully loaded with the calculated server, back to power per 1U.

- Used the ‘released’ date of the server type found in the Quickspec documentation.

- Collected data of server utilization at 100%, 80%, 50%. All converted to the usage at 1U for trend analysis.

This resulted in the following table:

| Server type | Year | CPU core count | CPU type | RAM (GB) | HD (GB) | 100% util (Watt for 1U) | 80% util (Watt for 1U) | 50% util (Watt for 1U) |
|---|---|---|---|---|---|---|---|---|
| HP BL20p | 2002 | 1 | 2x Intel PIII | 4 | 2x 36 | 328.00 | | |
| HP DL360 | 2003 | 1 | 2x Intel PII | 4 | 2x 18 | 176.00 | | |
| HP DL360G3 | 2004 | 1 | 2x Intel Xeon 2.8 GHz | 8 | 2x 36 | 360.00 | | |
| HP BL20pG4 | 2006 | 1 | 2x Intel Xeon 5110 | 8 | 2x 36 | 400.00 | | |
| HP BL460c G1 | 2006 | 4 | 2x Intel L5320 | 8 | 2x 36 | 397.60 | 368.80 | 325.90 |
| HP DL360G5 | 2008 | 2 | 2x Intel L5240 | 8 | 2x 36 | 238.00 | 226.00 | 208.00 |
| HP BL460c G5 | 2009 | 4 | 2x Intel L5430 | 8 | 2x 36 | 368.40 | 334.40 | 283.80 |
| HP DL360G7 | 2011 | 4 | 2x Intel L5630 | 8 | 2x 60 SSD | 157.00 | 145.00 | 128.00 |
| HP BL460c G7 | 2011 | 6 | 2x Intel L5640 | 8 | 2x 120 SSD | 354.40 | 323.90 | 278.40 |
| HP BL460c Gen8 | 2012 | 6 | 2x Intel 2630L | 8 | 2x 100 SSD | 271.20 | 239.10 | 190.60 |
| HP DL360e Gen8* | 2013 | 6 | 2x Intel 2430L | 8 | 2x 100 SSD | 170.00 | 146.00 | 113.00 |
| HP DL360p Gen8* | 2013 | 6 | 2x Intel 2630L | 8 | 2x 100 SSD | 252.00 | 212.00 | 153.00 |
| HP Moonshot | 2013 | 2 | Intel Atom S1260 | 8 | 1x 500 | 177.20 | 172.40 | 165.20 |

\* HP split the DL360 into a stripped-down version (the ‘e’) and an extended version (the ‘p’).
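The conversion of a fully loaded blade chassis back to power per 1U, as listed in the guidelines, comes down to a single division. Below is a minimal Python sketch, assuming the chassis power is spread evenly over its height. The function name `watts_per_u` is hypothetical, the 10U height is that of the c7000 enclosure, and the 2712 W input is simply the table's 271.20 W per U multiplied back by 10U, not a reading from HP Power Advisor.

```python
def watts_per_u(chassis_watts: float, chassis_height_u: int) -> float:
    """Spread the power of a fully loaded chassis evenly over its rack units."""
    if chassis_height_u <= 0:
        raise ValueError("chassis height must be a positive number of rack units")
    return chassis_watts / chassis_height_u


# Illustrative input only: 2712 W for a fully populated 10U enclosure.
per_u = watts_per_u(2712.0, 10)
print(f"{per_u:.2f} W per U")
```

A 1U server needs no conversion, which is what makes the per-U figure a convenient common unit across form factors.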

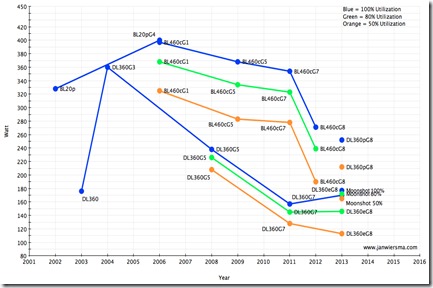

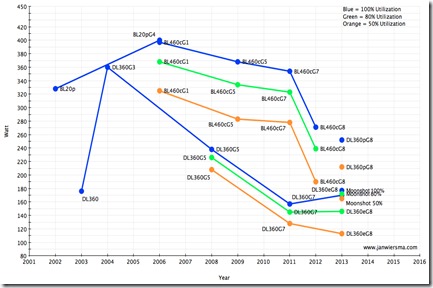

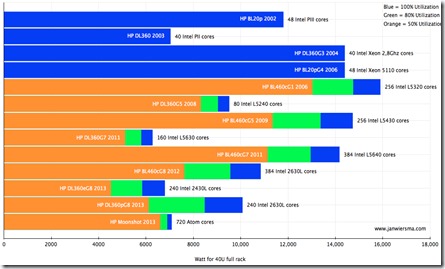

And a nice graph:

The graph shows an interesting peak around 2004-2006. After that the power consumption declined. This is mostly due to power optimized CPU and memory modules. The introduction of Solid State Disks (SSD) is also a big contributor.

Obviously people will argue that:

- the performance for most systems is task specific

- and blades provide higher density (more CPU cores) per rack,

- and some systems provide more performance and maybe more performance/Watt,

- etc…

Well, datacenter facility guys couldn’t care less about those arguments. For them it’s about the power per 1U or the power per rack and its trend.

With a depreciation time of 10-15 years on facility equipment, the datacenter needs to support many IT refresh cycles. IT guys getting faster CPUs, more memory and bigger disks is really nice, and it’s even better if the performance/watt ratio is great… but if the overall rack density goes up, then facilities needs to support it.

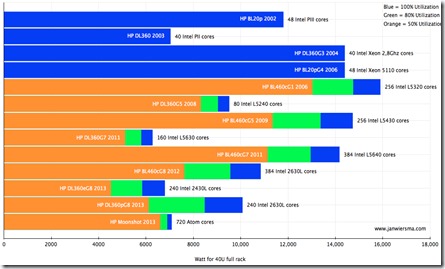

To provide more perspective on the density of the CPU/rack, I plotted the amount of CPU cores at a 40U filled rack vs. total power at 40U:

Still impressive numbers: between 240 and 720 CPU cores in 40U of modern day equipment.
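The rack-level figures can be approximated from the table with a few lines of Python. This is a sketch under stated assumptions, not the method behind the graph: the class `Platform` and its fields are hypothetical names, the nodes per chassis (16 half-height blades in a 10U c7000, 45 cartridges in a Moonshot chassis) are not stated in the text above, and the Moonshot height is taken as the 5U mentioned earlier.

```python
from dataclasses import dataclass

RACK_UNITS = 40  # filled rack height used for the comparison


@dataclass(frozen=True)
class Platform:
    name: str
    watts_per_u: float      # 100% utilization column of the table
    cores_per_cpu: int      # "CPU core count" column of the table
    nodes_per_chassis: int  # assumption, not stated in the text
    chassis_height_u: int   # assumption, except Moonshot's 5U

    def nodes_per_rack(self, rack_units: int = RACK_UNITS) -> int:
        # Only whole chassis fit in the rack.
        return (rack_units // self.chassis_height_u) * self.nodes_per_chassis

    def cores_per_rack(self, cpus_per_node: int = 1,
                       rack_units: int = RACK_UNITS) -> int:
        return self.nodes_per_rack(rack_units) * cpus_per_node * self.cores_per_cpu

    def kw_per_rack(self, rack_units: int = RACK_UNITS) -> float:
        return self.watts_per_u * rack_units / 1000.0


PLATFORMS = [
    Platform("HP DL360p Gen8", 252.00, 6, nodes_per_chassis=1, chassis_height_u=1),
    Platform("HP BL460c Gen8", 271.20, 6, nodes_per_chassis=16, chassis_height_u=10),
    Platform("HP Moonshot", 177.20, 2, nodes_per_chassis=45, chassis_height_u=5),
]

for p in PLATFORMS:
    print(f"{p.name}: {p.cores_per_rack()} cores, "
          f"{p.kw_per_rack():.2f} kW per {RACK_UNITS}U")
```

Counting the table's core count once per node gives 240 cores for the 1U server and 720 for Moonshot, the two end points quoted above. Passing `cpus_per_node=2` for the dual-socket ProLiant systems doubles their counts. Whether the plotted figures were derived this way is an assumption.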

Next I wanted to test my hypothesis, so I looked at an active deployment of 10,000+ servers, 1 to 10 years old, from Dell/IBM/HP/SuperMicro. I grouped them by age (2003-2013) and sorted them by form factor: 1U rackmount, blades and Density Optimized. I selected systems with roughly the same hardware config (2 CPU, 2 HD, 8GB RAM). For most age groups the actual power consumption (at 100%, 80% and 50% utilization) seemed off by 10%-15% from the calculated values, but the trend remained the same, especially among form factors.

It also confirmed that after the drop, due to energy optimized components and SSD, the power consumption per U is now rising slightly again.

Density in general seemed to rise with lots more CPU cores per rack, but at a higher power consumption cost on a per rack basis.

Let’s take out the crystal ball

The price of compute & storage continues to drop, especially if you look at Amazon and Google.

Google and Microsoft have consistently been dropping prices over the past several months. In November, Google dropped storage prices by 20 percent.

For AWS, the price drops are consistent with its strategy. AWS believes it can use its scale, purchasing power and deeper efficiencies in the management of its infrastructure to continue dropping prices. [Techcrunch]

If you follow Jevons Paradox then this will lead to more compute and storage consumption.

All this compute and storage capacity still needs to be provisioned in datacenters around the world. The last time IT experienced growing pains at the intersection between IT and Facility, it accelerated the development of blade servers to optimize the physical space used (that was a bad cure for some… but that is beside the point now). The current rapid growth has accelerated the development of Density Optimized servers, which strike a better balance between performance, physical space and energy usage. All major vendors and projects like Open Compute are working on this, with Density Optimized server revenue growing 66.4% year over year in 4Q12.

Blades also continue to gain market share and now account for 16.3% of total server revenue:

"Both types of modular form factors outperformed the overall server market, indicating customers are increasingly favoring specialization in their server designs" said Jed Scaramella, IDC research manager, Enterprise Servers "Density Optimized servers were positively impacted by the growth of service providers in the market. In addition to HPC, Cloud and IT service providers favor the highly efficient and scalable design of Density Optimized servers. Blade servers are being leveraged in enterprises’ virtualized and private cloud environments. IDC is observing an increased interest from the market for converged systems, which use blades as the building block. Enterprise IT organizations are viewing converged systems as a method to simplify management and increase their time to value." [IDC]

With cloud providers going for Density Optimized and enterprise IT for blade servers, the market is clearly moving to optimizing rack space. We will see a steady rise in demand for kW/rack with Density Optimized already at 8-10kW/rack and blades 12-16kW/rack (@ 46U).

There will still be room in the market for the ‘normal’ rackmount server like the 1U, but the 2012 and 2013 models already show signs of a rise in watt/U for those systems also.

For the datacenter owner this means either supplying more cooling and power to meet demand or leaving racks (half) empty, if you haven’t built for these consumption values already.
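How empty a rack ends up can be estimated from the per-U figures in the table. Below is a rough Python sketch, assuming a fixed power budget per rack and ignoring that blades only come in whole chassis. The function names are hypothetical, and the 7 kW budget is the upper end of the 5-7 kW range mentioned at the start.

```python
import math


def usable_units(rack_budget_watts: float, watts_per_u: float,
                 rack_units: int = 46) -> int:
    """Rack units that can be populated without exceeding the power budget."""
    if watts_per_u <= 0:
        raise ValueError("watts per U must be positive")
    return min(rack_units, math.floor(rack_budget_watts / watts_per_u))


def rack_demand_kw(watts_per_u: float, rack_units: int = 46) -> float:
    """Power demand of a completely filled rack, in kW."""
    return watts_per_u * rack_units / 1000.0


BUDGET_WATTS = 7000.0  # assumed facility design value per rack

# 100% utilization values from the table (Watt for 1U).
for name, per_u in [("HP Moonshot", 177.20),
                    ("HP BL460c Gen8", 271.20),
                    ("HP BL460c G7", 354.40)]:
    print(f"{name}: full 46U rack needs {rack_demand_kw(per_u):.1f} kW, "
          f"{usable_units(BUDGET_WATTS, per_u)}U usable at 7 kW")
```

With these inputs a full 46U rack of Moonshot lands just above 8 kW, and the two blade generations land at roughly 12.5 and 16.3 kW, which is in line with the ranges quoted above.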

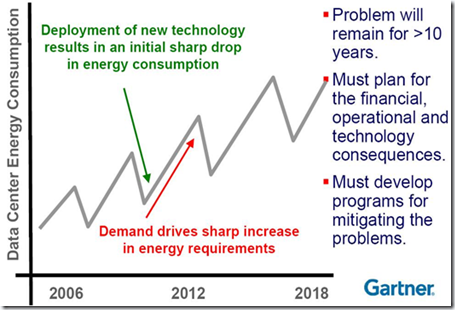

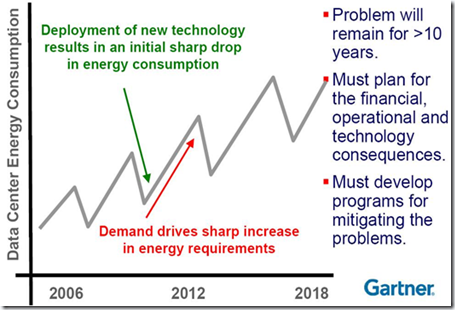

In the long run we will follow the Gartner curve from 2007:

With the market currently being in the ‘drop’ phase (a little behind on the prediction…) and moving towards the ‘increase’ phase.

More:

Density Optimized servers (aka microservers) market is booming

IDC starts tracking hyperscale server market

Documentation and disclaimer on the HP Power Advisor

