Where is the open datacenter facility API ?

<English cross post with my DCP blog>

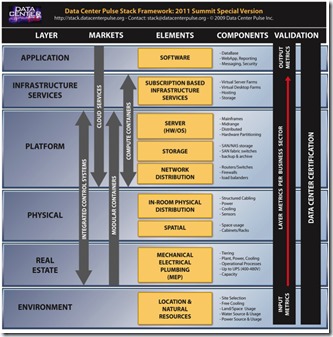

For some time the Datacenter Pulse top 10 has featured an item called ‘ Converged Infrastructure Intelligence‘. The 2012 presentation mentioned:

Treat the DC infrastructure as an IT system;

– Converge in the infrastructure instrumentation and control systems

– Connect it into the IT systems for ultimate control

Standardize connections and protocols to connect components

With datacenter infrastructure becoming a more complex system and the need for better efficiency within the whole datacenter stack, the need arises to integrate layers of the stack and make them ‘talk’ to each other.

This is shown in the DCP Stack framework with the need for ‘integrated control systems’; going up from the (facility) real-estate layer to the (IT) platform layer.

So if we have the ‘integrated control systems’, what would we be able to do?

We could:

- Influence behavior (can’t control what you don’t know); application developers can be given insight on their power usage when they write code for example. This is one of the needed steps for more energy efficient application programming. It will also provide more insight of the complete energy flow and more detailed measurements.

- Design for lower level TIER datacenters; when failure is imminent, IT systems can be triggered to move workloads to other datacenter locations. This can be triggered by signals from the facility equipment to the IT systems.

- Design close control cooling systems that trigger on real CPU and memory temperature and not on room level temperature sensors. This could eliminate hot spots and focus the cooling energy consumption on the spots where it is really needed. It could even make the cooling system aware of oncoming throttle up from IT systems.

- Optimize datacenters for smart grid. The increase of sustainable power sources like wind and solar energy, increases the need for more flexibility in energy consumption. Some may think this is only the case when you introduce onsite sustainable power generation, but the energy market will be affected by the general availability of sustainable power sources also. In the end the ability to be flexible will lead to lower energy prices. Real supply and demand management in the datacenters requires integrated information and control from the facility layers and IT layers of the stack.

- …

Gap between IT and facility does not only exists between IT and facility staff but also between their information systems. Closing the gap between people and systems will make the datacenter more efficient, more reliable and opens up a whole new world of possibilities.

This all leads to something that has been on my wish list for a long, long time: the datacenter facility API (Application programming interface)

I’m aware that we have BMS systems supporting open protocols like BACnet, LonWorks and Modbus, and that is great. But they are not ‘IT ready’. I know some BMS systems support integration using XML and SOAP but that is not based on a generic ‘open standard framework’ for datacenter facilities.

So what does this API need to be ?

First it needs to be an ‘open standard’ framework; publicly available and no rights restrictions for the usage of the API framework.

This will avoid vendor lock-in. History has shown us, especially in the area of SCADA and BMS systems, that our vendors come up with many great new proprietary technologies. While I understand that the development of new technology takes time and a great deal of money, locking me in to your specific system is not acceptable anymore.

A vendor proprietary system in the co-lo and wholesale facility will lead to the lock-in of co-lo customers. This is great for the co-lo datacenter owner, but not for its customer. Datacenter owners, operators and users need to be able to move between facilities and systems.

Every vendor that uses the API framework needs to use the same routines, data structures, object classes. Standardized. And yes, I used the word ‘Standardized’. So it’s a framework we all need to agree up on.

These two sentences are the big difference between what is already available and what we actually need. It should not matter if you place your IT systems in your own datacenter or with co-lo provider X, Y, Z. The API will provide the same information structure and layout anywhere…

(While it would be good to have the BMS market disrupted by open source development, having an open standard does not mean all the surrounding software needs to be open source. Open standard does not equal open source and vice versa.)

It needs to be IT ready. An IT application developer needs to be able to talk to the API just like he would to any other IT application API; so no strange facility protocols. Talk IP. Talk SOAP or better: REST. Talk something that is easy to understand and implement for the modern day application developer.

All this openness and ease of use may be scary for vendors and even end users because many SCADA and BMS systems are famous for relying on ‘security through obscurity’. All the facility specific protocols are notoriously hard to understand and program against. So if you don’t want to lose this false sense of security as a vendor; give us a ‘read only’ API. I would be very happy with only this first step…

So what information should this API be able to feed ?

Most information would be nice to have in near real time :

- Temperature at rack level

- Temperature outside of the building

- kWh, but other energy related would be nice at rack level

- warnings / alarms at rack and facility level

- kWh price (can be pulled from the energy market, but that doesn’t include the full datacenter kWh price (like a PUE markup))

(all if and where applicable and available)

The information owner would need features like access control for rack level information exchange and be able to tweak the real time features; we don’t want to create unmanageable information streams; in security, volume and amount.

So what do you think the API should look like? What information exchange should it provide? And more importantly; who should lead the effort to create the framework? Or… do you believe the Physical Datacenter API framework is already here?

More:

Good API design by Google : http://www.youtube.com/watch?v=heh4OeB9A-c&feature=gv