The tale of Services v.s. Cloud product organizations

<This blog is background material as part of my 2017/2018 VU lecture series >

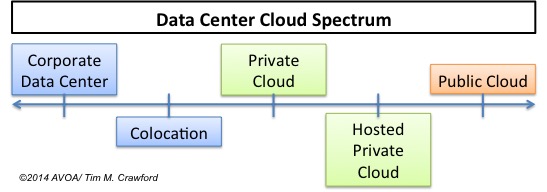

As companies transition their product delivery methodology from on-premise software to a As A Service (PAAS/SAAS) model, they are confronted with very different motions across their Sales, Marketing, Development, Services and Support organizations.

One of the examples that show the difference how ‘execution’ is done in these models, is how Services and Product is managed across the organization. For larger on-premise software companies it is not uncommon to see Professional Services (PS) bookings v.s. software bookings rates of >3, meaning that customers pay more for the implementation and assistance in software management, then the actual purchase price of the software.

The Cloud delivery model has very different PS dynamic, as Waterstone reports in their 2015 report – changing Professional Services Economics ;

“There is growing preference for Cloud- and SaaS-based solutions that, on average, have a PS attach rate around 0.5x to 1.0X (versus the 2.9x PS attach rate commonly seen with traditional licensed products).”

The analysis is not strange, as Cloud is all about providing low friction of onboarding by self-service and automation. This means getting the human out of the equation, as it’s a limiting factor in scalability and raises cost.

Cloud is all about minimizing the time from idea -to- revenue, while being able to scale rapidly and keeping cost low.

The definition of ‘the product’ in a Cloud world therefore isn’t only about the bits & bytes, but includes successful onboarding of the customer and maximizing their usage.